Brains in a Vat

By Hilary Putnam

(from Reason, Truth, and History, chapter 1, pp. 1-21)

An ant is crawling on a

patch of sand. As it crawls, it traces a line in the sand. By pure chance

the line that it traces curves and recrosses itself in such a way that it

ends up looking like a recognizable caricature of Winston Churchill. Has

the ant traced a picture of Winston Churchill, a picture that depicts Churchill?

Most people would say,

on a little reflection, that it has not. The ant, after all, has never seen

Churchill, or even a picture of Churchill, and it had no intention of

depicting Churchill. It simply traced a line (and even that was unintentional), a line that we can 'see as' a picture of

Churchill.

We can express this by

saying that the line is not 'in itself' a representation1

of anything rather than anything else. Similarity (of a certain very

complicated sort) to the features of Winston Churchill is not sufficient to

make something represent or refer to Churchill. Nor is it necessary: in our

community the printed shape 'Winston Churchill', the spoken words 'Winston

Churchill', and many other things are used to represent Churchill (though

not pictorially), while not having the sort of similarity to Churchill that

a picture (even a line drawing) has. If similarity is not necessary or sufficient to make

something represent something else, how can anything be necessary or sufficient for this purpose?

How on earth can one thing represent (or 'stand for', etc.) a different

thing?

The answer may seem easy. Suppose the ant had seen

Winston Churchill, and suppose that it had the intelligence and skill to

draw a picture of him. Suppose it produced the caricature intentionally. Then the line would

have represented Churchill.

On the other hand,

suppose the line had the shape WINSTON CHURCHILL. And suppose this was just

accident (ignoring the improbability involved). Then the 'printed shape'

WINSTON CHURCHILL would not

have represented Churchill, although that printed shape does represent

Churchill when it occurs in almost any book today.

So it may seem that

what is necessary for representation, or what is mainly necessary for

representation, is intention.

But to have the

intention that anything,

even private language (even the words 'Winston Churchill' spoken in my mind

and not out loud), should represent Churchill, I must have been able to

think about Churchill in the first place. If lines in the sand, noises,

etc., cannot 'in themselves' represent anything, then how is it that

thought forms can 'in themselves' represent anything? Or can they? How can

thought reach out and 'grasp' what is external?

Some philosophers have,

in the past, leaped from this sort of consideration to what they take to be

a proof that the mind is essentially

non-physical in nature. The argument is simple; what we said

about the ant's curve applies to any physical object. No physical object

can, in itself, refer to one thing rather than to another; nevertheless, thoughts in the mind obviously do

succeed in referring to one thing rather than another. So thoughts (and

hence the mind) are of an essentially different nature than physical

objects. Thoughts have the characteristic of intentionality: they can refer to something else;

nothing physical has 'intentionality', save as that intentionality is derivative

from some employment of that physical thing by a mind. Or so it is claimed.

This is too quick; just postulating mysterious powers of mind solves

nothing. But the problem is very real. How is intentionality, reference,

possible?

Magical theories of reference

We saw that the ant's 'picture' has no necessary

connection with Winston Churchill. The mere fact that the 'picture' bears a

'resemblance' to Churchill does not make it into a real picture, nor does

it make it a representation of Churchill. Unless the ant is an intelligent

ant (which it isn't) and knows about Churchill (which it doesn't), the

curve it traced is not a picture or even a representation of anything. Some

primitive people believe that some representations (in particular, names) have a necessary connection

with their bearers; that to know the 'true name' of someone or something

gives one power over it. This power comes from the magical connection between the name

and the bearer of the name; once one realizes that a name only has a contextual, contingent,

conventional connection with its bearer, it is hard to see why knowledge of

the name should have any mystical significance.

What is important to

realize is that what goes for physical pictures also goes for mental

images, and for mental representations in general; mental representations

no more have a necessary connection with what they represent than physical

representations do. The contrary supposition is a survival of magical

thinking.

Perhaps the point is

easiest to grasp in the case of mental images.

(Perhaps the first philosopher to grasp the enormous significance of this

point, even if he was not the first to actually make it, was Wittgenstein.)

Suppose there is a planet somewhere on which human beings have evolved (or

been deposited by alien spacemen, or what have you). Suppose these humans,

although otherwise like us, have never seen trees. Suppose they have never imagined trees (perhaps

vegetable life exists on their planet only in the form of molds). Suppose

one day a picture of a tree is accidentally dropped on their planet by a

spaceship which passes on without having other contact with them. Imagine

them puzzling over the picture. What in the world is this? All sorts of

speculations occur to them: a building, a canopy, even an animal of some

kind. But suppose they never come close to the truth.

For us the picture is a representation of

a tree. For these humans the picture only represents a strange object,

nature and function unknown. Suppose one of them has a mental image which

is exactly like one of my mental images of a tree as a result of having

seen the picture. His mental image is not a representation of a tree. It is only a representation of

the strange object (whatever it is) that the mysterious picture represents.

Still, someone might

argue that the mental image is in fact

a representation of a tree, if only because the picture which caused this

mental image was itself a representation of a tree to begin with. There is

a causal chain from actual trees to the mental image even if it is a very

strange one.

But even this causal

chain can be imagined absent. Suppose the 'picture of the tree' that the

spaceship dropped was not really a picture of a tree, but the accidental

result of some spilled paints. Even if it looked exactly like a picture of

a tree, it was, in truth, no more a picture of a tree than the ant's

'caricature' of Churchill was a picture of Churchill. We can even imagine

that the spaceship which dropped the 'picture' came from a planet which

knew nothing of trees. Then the humans would still have mental images

qualitatively identical with my image of a tree, but they would not be

images which represented a tree any more than anything else.

The same thing is true

of words. A discourse on

paper might seem to be a perfect description of trees, but if it was

produced by monkeys randomly hitting keys on a typewriter for millions of

years, then the words do not refer to anything. If there were a person who

memorized those words and said them in his mind without understanding them,

then they would not refer to anything when thought in the mind, either.

Imagine the person who

is saying those words in his mind has been hypnotized. Suppose the words

are in Japanese, and the person has been told that he understands Japanese.

Suppose that as he thinks those words he has a 'feeling of understanding'.

(Although if someone broke into his train of thought and asked him what

the words he was thinking meant,

he would discover he couldn't say.) Perhaps the illusion would be so perfect

that the person could even fool a Japanese telepath! But if he couldn't use

the words in the right contexts, answer questions about what he 'thought',

etc., then he didn't understand them.

By combining these

science fiction stories I have been telling, we can contrive a case in

which someone thinks words which are in fact a description of trees in some

language and simultaneously

has appropriate mental images, but neither

understands the words nor

knows what a tree is. We can even imagine that the mental images were

caused by paint-spills (although the person has been hypnotized to think

that they are images of something appropriate to his thought; only, if he

were asked, he wouldn't be able to say of what). And we can imagine that

the language the person is thinking in is one neither the hypnotist nor the

person hypnotized has ever heard of; perhaps it is just coincidence that

these 'nonsense sentences', as the hypnotist supposes them to be, are a

description of trees in Japanese. In short, everything passing before the

person's mind might be qualitatively identical with what was passing

through the mind of a Japanese speaker who was really thinking about trees, but none of it would refer

to trees.

All of this is really

impossible, of course, in the way that it is really impossible that monkeys

should by chance type out a copy of Hamlet.

That is to say that the probabilities against it are so high as to mean it

will never really happen (we think). But it is not logically impossible, or

even physically impossible. It could

happen (compatibly with physical law and, perhaps, compatibly with actual

conditions in the universe, if there are lots of intelligent beings on

other planets). And if it did happen, it would be a striking demonstration

of an important conceptual truth: that even a large and complex system of

representations, both verbal and visual, still does not have an intrinsic, built-in, magical

connection with what it represents, a connection independent of how it was

caused and what the dispositions of the speaker or thinker are. And this is

true whether the system of representations (words and images, in the case

of the example) is physically realized (the words are written or spoken,

and the pictures are physical pictures) or only realized in the mind.

Thought words and mental pictures do not intrinsically

represent what they are about.

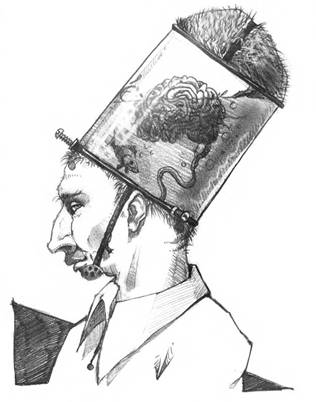

The case of the brains in a vat

Here is a science

fiction possibility discussed by philosophers: imagine that a human being

(you can imagine this to be yourself) has been subjected to an operation by

an evil scientist. The person's brain (your brain) has been removed from

the body and placed in a vat of nutrients which keeps the brain alive. The

nerve endings have been connected to a super-scientific computer which

causes the person whose brain it is to have the illusion that everything is

perfectly normal. There seem to be people, objects, the sky, etc.; but

really, all the person (you) is experiencing is the result of electronic

impulses traveling from the computer to the nerve endings. The computer is

so clever that if the person tries to raise his hand, the feedback from the

computer will cause him to 'see' and 'feel' the hand being raised.

Moreover, by varying the program, the evil scientist can cause the victim

to 'experience' (or hallucinate) any situation or environment the evil

scientist wishes. He can also obliterate the memory of the brain operation,

so that the victim will seem to himself to have always been in this

environment. It can even seem to the victim that he is sitting and reading

these very words about the amusing but quite absurd supposition that there

is an evil scientist who removes people's brains from their bodies and

places them in a vat of nutrients which keep the brains alive. The nerve

endings are supposed to be connected to a super-scientific computer which

causes the person whose brain it is to have the illusion that...

When this sort of

possibility is mentioned in a lecture on the Theory of Knowledge, the

purpose, of course, is to raise the classical problem of skepticism with

respect to the external world in a modern way. (How do you know you aren't in this predicament?) But

this predicament is also a useful device for raising issues about the

mind/world relationship.

Instead of having just

one brain in a vat, we could imagine that all human beings (perhaps all

sentient beings) are brains in a vat (or nervous systems in a vat in case

some beings with just a minimal nervous system already count as

'sentient'). Of course, the evil scientist would have to be outside, or

would he? Perhaps there is no evil scientist, perhaps (though this is

absurd) the universe just happens to consist of automatic machinery tending

a vat full of brains and nervous systems.

This time let us suppose

that the automatic machinery is programmed to give us all a collective hallucination, rather than

a number of separate unrelated hallucinations. Thus, when I seem to myself

to be talking to you, you seem to yourself to be hearing my words. Of course,

it is not the case that my words actually reach your ears, for you don't

have (real) ears, nor do I have a real mouth and tongue. Rather, when I

produce my words, what happens is that the efferent impulses travel from my

brain to the computer, which both causes me to 'hear' my own voice uttering

those words and 'feel' my tongue moving, etc., and causes you to 'hear' my

words, 'see' me speaking, etc. In this case, we are, in a sense, actually

in communication. I am not mistaken about your real existence (only about

the existence of your body and the 'external world', apart from brains).

From a certain point of view, it doesn't even matter that 'the whole world'

is a collective hallucination; for you do, after all, really hear my words

when I speak to you, even if the mechanism isn't what we suppose it to be.

(Of course, if we were two lovers making love, rather than just two people

carrying on a conversation, then the suggestion that it was just two brains

in a vat might be disturbing.)

I want now to ask a

question which will seem very silly and obvious (at least to some people,

including some very sophisticated philosophers), but which will take us to

real philosophical depths rather quickly. Suppose this whole story were

actually true. Could we, if we were brains in a vat in this way, say or think that we were?

I am going to argue

that the answer is 'No, we couldn't.' In fact, I am going to argue that the

supposition that we are actually brains in a vat, although it violates no

physical law, and is perfectly consistent with everything we have

experienced, cannot possibly be true. It

cannot possibly be true, because it is, in a certain way,

self-refuting.

The argument I am going

to present is an unusual one, and it took me several years to convince myself

that it is really right. But it is a correct argument. What makes it seem

so strange is that it is connected with some of the very deepest issues in

philosophy. (It first occurred to me when I was thinking about a theorem in

modern logic, the 'Skolem-Löwenheim Theorem', and I suddenly saw a

connection between this theorem and some arguments in Wittgenstein's Philosophical Investigations.)

A 'self-refuting

supposition' is one whose truth implies its own falsity. For example,

consider the thesis that all general statements are false. This is a

general statement. So if it is true, then it must be false. Hence, it is

false. Sometimes a thesis is called 'self-refuting' if it is the supposition that the thesis is entertained or

enunciated that implies its falsity. For example, 'I do not

exist' is self-refuting if thought by me

(for any 'me'). So one can

be certain that one oneself exists, if one thinks about it (as Descartes

argued).

What I shall show is

that the supposition that we are brains in a vat has just this property. If

we can consider whether it is true or false, then it is not true (I shall

show). Hence it is not true.

Before I give the

argument, let us consider why it seems so strange that such an argument can

be given (at least to philosophers who subscribe to a 'copy' conception of

truth). We conceded that it is compatible with physical law that there

should be a world in which all sentient beings are brains in a vat. As

philosophers say, there is a 'possible world' in which all sentient beings

are brains in a vat. (This 'possible world' talk makes it sound as if there

is a place where any absurd

supposition is true, which is why it can be very misleading in philosophy.)

The humans in that possible world have exactly the same experiences that we do. They think the same thoughts

we do (at least, the same words, images, thought-forms, etc., go through

their minds). Yet, I am claiming that there is an argument we can give that

shows we are not brains in a vat. How can there be? And why couldn't the people

in the possible world who really are brains in a vat give it too?

The answer is going to

be (basically) this: although the people in that possible world can think

and 'say' any words we can think and say, they cannot (I claim) refer to what we can refer to. In

particular, they cannot think or say that they are brains in a vat (even by thinking 'we are brains in a vat').

Turing's test

Suppose someone

succeeds in inventing a computer which can actually carry on an intelligent

conversation with one (on as many subjects as an intelligent person might).

How can one decide if the computer is 'conscious'?

The British logician

Alan Turing proposed the following test:2 let someone carry on a

conversation with the computer and a conversation with a person whom he

does not know. If he cannot tell which is the computer and which is the

human being, then (assume the test to be repeated a sufficient number of

times with different interlocutors) the computer is conscious. In short, a

computing machine is conscious if it can pass the 'Turing Test'. (The

conversations are not to be carried on face to face, of course, since the

interlocutor is not to know the visual appearance of either of his two

conversational partners. Nor is voice to be used, since the mechanical voice

might simply sound different from a human voice. Imagine, rather, that the

conversations are all carried on via electric typewriter. The interlocutor

types in his statements, questions, etc., and the two partners, the

machine and the person, respond via the electric keyboard. Also, the

machine may lie: asked 'Are you a machine?', it might reply, 'No, I'm an

assistant in the lab here.')

The idea that this test

is really a definitive test of consciousness has been criticized by a

number of authors (who are by no means hostile in principle to the idea

that a machine might be conscious). But this is not our topic at this time.

I wish to use the general idea of the Turing test, the general idea of a dialogic test of competence, for a

different purpose, the purpose of exploring the notion of reference.

Imagine a situation in

which the problem is not to determine if the partner is really a person or

a machine, but is rather to determine if the partner uses the words to

refer as we do. The obvious test is, again, to carry on a conversation,

and, if no problems arise, if the partner 'passes' in the sense of being

indistinguishable from someone who is certified in advance to be speaking

the same language, referring to the usual sorts of objects, etc., to

conclude that the partner does refer to objects as we do. When the purpose

of the Turing test is as just described, that is, to determine the

existence of (shared) reference, I shall refer to the test as the Turing Test for Reference. And, just

as philosophers have discussed the question whether the original Turing

test is a definitive test

for consciousness, i.e. the question of whether a machine which 'passes'

the test not just once but regularly is necessarily

conscious, so, in the same way, I wish to discuss the question of whether

the Turing Test for Reference just suggested is a definitive test for

shared reference.

The answer will turn

out to be 'No'. The Turing Test for Reference is not definitive. It is

certainly an excellent test in practice; but it is not logically impossible

(though it is certainly highly improbable) that someone could pass the

Turing Test for Reference and not be referring to anything. It follows from

this, as we shall see, that we can extend our observation that words (and

whole texts and discourses) do not have a necessary connection to their

referents. Even if we consider not words by themselves but rules deciding

what words may appropriately be produced in certain contexts, even if we

consider, in computer jargon, programs

for using words, unless those programs themselves refer to something extralinguistic

there is still no determinate reference that those words possess. This will

be a crucial step in the process of reaching the conclusion that the

Brain-in-a-Vat Worlders cannot refer to anything external at all (and hence

cannot say that they are

Brain-in-a-Vat Worlders).

Suppose, for example,

that I am in the Turing situation (playing the 'Imitation Game', in

Turing's terminology) and my partner is actually a machine. Suppose this

machine is able to win the game ('passes' the test). Imagine the machine to

be programmed to produce beautiful responses in English to statements,

questions, remarks, etc. in English, but that it has no sense organs (other

than the hookup to my electric typewriter), and no motor organs (other than

the electric typewriter). (As far as I can make out, Turing does not assume

that the possession of either sense organs or motor organs is necessary for

consciousness or intelligence.) Assume that not only does the machine lack

electronic eyes and ears, etc., but that there are no provisions in the

machine's program, the program for playing the Imitation Game, for

incorporating inputs from such sense organs, or for controlling a body.

What should we say about such a machine?

To me, it seems evident

that we cannot and should not attribute reference to such a device. It is

true that the machine can discourse beautifully about, say, the scenery in New England. But it could not recognize an apple tree

or an apple, a mountain or a cow, a field or a steeple, if it were in front

of one.

What we have is a device for producing sentences in

response to sentences. But none of these sentences is at all connected to

the real world. If one coupled two of

these machines and let them play the Imitation Game with each other, then

they would go on 'fooling' each other forever, even if the rest of the

world disappeared! There is no more reason to regard the

machine's talk of apples as referring to real world apples than there is to

regard the ant's 'drawing' as referring to Winston Churchill.

What produces the

illusion of reference, meaning, intelligence, etc., here is the fact that

there is a convention of representation which we have under which the

machine's discourse refers to apples, steeples, New

England, etc. Similarly, there is the illusion that the ant has caricatured

Churchill, for the same reason. But we are able to perceive, handle, deal

with apples and fields. Our talk of apples and fields is intimately connected

with our non-verbal transactions with apples and fields. There are

'language entry rules' which take us from experiences of apples to such

utterances as 'I see an apple', and 'language exit rules' which take us

from decisions expressed in linguistic form ('I am going to buy some

apples') to actions other than speaking. Lacking either language entry

rules or language exit rules, there is no reason to regard the conversation

of the machine (or of the two machines, in the case we envisaged of two

machines playing the Imitation Game with each other) as more than syntactic

play. Syntactic play that resembles

intelligent discourse, to be sure; but only as (and no more than) the ant's

curve resembles a biting caricature.

In the case of the ant,

we could have argued that the ant would have drawn the same curve even if

Winston Churchill had never existed. In the case of the machine, we cannot

quite make the parallel argument; if apples, trees, steeples and fields had

not existed, then, presumably, the programmers would not have produced that

same program. Although the machine does not perceive apples, fields, or steeples, its

creator-designers did. There is some

causal connection between the machine and the real world apples, etc., via

the perceptual experience and knowledge of the creator-designers. But such

a weak connection can hardly suffice for reference. Not only is it

logically possible, though fantastically improbable, that the same machine could have existed even if apples,

fields, and steeples had not existed; more important, the machine is

utterly insensitive to the continued

existence of apples, fields, steeples, etc. Even if all these things ceased to exist, the machine would

still discourse just as happily in the same way. That is why the machine cannot

be regarded as referring at all.

The point that is

relevant for our discussion is that there is nothing in Turing's Test to

rule out a machine which is programmed to do nothing but play the Imitation Game, and that

a machine which can do nothing but

play the Imitation Game is clearly

not referring any more than a record player is.

Brains in a vat (again)

Let us compare the

hypothetical 'brains in a vat' with the machines just described. There are

obviously important differences. The brains in a vat do not have sense

organs, but they do have provision

for sense organs; that is, there are afferent nerve endings, there are

inputs from these afferent nerve endings, and these inputs figure in the

'program' of the brains in the vat just as they do in the program of our

brains. The brains in a vat are brains;

moreover, they are functioning

brains, and they function by the same rules as brains do in the actual

world. For these reasons, it would seem absurd to deny consciousness or

intelligence to them. But the fact that they are conscious and intelligent

does not mean that their words refer to what our words refer to. The question

we are interested in is this: do their verbalizations containing, say, the

word 'tree' actually refer to trees?

More generally: can they refer to external

objects at all? (As opposed to, for example, objects in the image produced

by the automatic machinery.)

To fix our ideas, let

us specify that the automatic machinery is supposed to have come into

existence by some kind of cosmic chance or coincidence (or, perhaps, to

have always existed). In this hypothetical world, the automatic machinery

itself is supposed to have no intelligent creator-designers. In fact, as we

said at the beginning of this chapter, we may imagine that all sentient

beings (however minimal their sentience) are inside the vat.

This assumption does

not help. For there is no connection between the word 'tree' as used by these brains

and actual trees. They would still use the word 'tree' just as they do, think

just the thoughts they do, have just the images they have, even if there

were no actual trees. Their images, words, etc., are qualitatively

identical with images, words, etc., which do represent trees in our world; but we have already seen

(the ant again!) that qualitative similarity to something which represents

an object (Winston Churchill or a tree) does not make a thing a

representation itself. In short, the brains in a vat are not thinking about

real trees when they think 'there is a tree in front of me' because there

is nothing by virtue of which their thought 'tree' represents actual trees.

If this seems hasty,

reflect on the following: we have seen that the words do not necessarily

refer to trees even if they are arranged in a sequence which is identical

with a discourse which (were it to occur in one of our minds) would

unquestionably be about trees

in the actual world. Nor does the 'program', in the sense of the rules,

practices, dispositions of the brains to verbal behavior, necessarily refer

to trees or bring about reference to trees through the connections it

establishes between words and words, or linguistic

cues and linguistic

responses. If these brains think about, refer to, represent trees (real

trees, outside the vat), then it must be because of the way the program

connects the system of language to non-verbal

inputs and outputs. There are indeed such non-verbal inputs and outputs in

the Brain-in-a-Vat world (those efferent and afferent nerve endings

again!), but we also saw that the 'sense-data' produced by the automatic

machinery do not represent trees (or anything external) even when they

resemble our tree images exactly. Just as a splash of paint might resemble

a tree picture without being

a tree picture, so, we saw, a 'sense datum' might be qualitatively

identical with an 'image of a tree' without being an image of a tree. How

can the fact that, in the case of the brains in a vat, the language is

connected by the program with sensory inputs which do not intrinsically or

extrinsically represent trees (or anything external) possibly bring it

about that the whole system of representations, the language in use, does refer to or represent trees or

anything external?

The answer is that it

cannot. The whole system of sense-data, motor signals to the efferent

endings, and verbally or conceptually mediated thought connected by

'language entry rules' to the sense-data (or whatever) as inputs and by

'language exit rules' to the motor signals as outputs, has no more

connection to trees than

the ant's curve has to Winston Churchill. Once we see that the qualitative similarity (amounting, if

you like, to qualitative identity) between the thoughts of the brains in a

vat and the thoughts of someone in the actual world by no means implies sameness

of reference, it is not hard to see that there is no basis at all for

regarding the brain in a vat as referring to external things.

The premises of the argument

I have now given the

argument promised to show that the brains in a vat cannot think or say that

they are brains in a vat. It remains only to make it explicit and to

examine its structure.

By what was just said,

when the brain in a vat (in the world where every sentient being is and

always was a brain in a vat) thinks 'There is a tree in front of me', his

thought does not refer to actual trees. On some theories that we shall

discuss it might refer to trees in the image, or to the electronic impulses

that cause tree experiences, or to the features of the program that are

responsible for those electronic impulses. These theories are not ruled out

by what was just said, for there is a close causal connection between the

use of the word 'tree' in vat-English and the presence of trees in the

image, the presence of electronic impulses of a certain kind, and the

presence of certain features in the machine's program. On these theories

the brain is right, not wrong, in thinking 'There is a tree in

front of me.' Given what 'tree' refers to in vat-English and what 'in front

of' refers to, assuming one of these theories is correct, then the truth

conditions for 'There is a tree in front of me' when it occurs in

vat-English are simply that a tree in the image be 'in front of' the 'me'

in question, in the image, or, perhaps, that the kind of electronic impulse

that normally produces this experience be coming from the automatic

machinery, or, perhaps, that the feature of the machinery that is supposed

to produce the 'tree in front of one' experience be operating. And these

truth conditions are certainly fulfilled.

By the same argument,

'vat' refers to vats in the image in vat-English, or something related

(electronic impulses or program features), but certainly not to real vats,

since the use of 'vat' in vat-English has no causal connection to real vats

(apart from the connection that the brains in a vat wouldn't be able to use

the word 'vat', if it were not for the presence of one particular vat, the

vat they are in; but this connection obtains between the use of every word in vat-English and that

one particular vat; it is not a special connection between the use of the particular word 'vat' and vats).

Similarly, 'nutrient fluid' refers to a liquid in the image in vat-English,

or something related (electronic impulses or program features). It follows

that if their 'possible world' is really the actual one, and we are really

the brains in a vat, then what we now mean by 'we are brains in a vat' is

that we are brains in a vat in the

image or something of that kind (if we mean anything at all).

But part of the hypothesis that we are brains in a vat is that we aren't

brains in a vat in the image (i.e. what we are 'hallucinating' isn't that

we are brains in a vat). So, if we are brains in a vat, then the sentence

'We are brains in a vat' says something false (if it says anything). In

short, if we are brains in a vat, then 'We are brains in a vat' is false.

So it is (necessarily) false.

The supposition that

such a possibility makes sense arises from a combination of two errors: (1)

taking physical possibility

too seriously; and (2) unconsciously operating with a magical theory of

reference, a theory on which certain mental representations necessarily

refer to certain external things and kinds of things.

There is a 'physically

possible world' in which we are brains in a vat: what does this mean

except that there is a description

of such a state of affairs which is compatible with the laws of physics?

Just as there is a tendency in our culture (and has been since the

seventeenth century) to take physics

as our metaphysics, that is, to view the exact sciences as the long-sought

description of the 'true and ultimate furniture of the universe', so there

is, as an immediate consequence, a tendency to take 'physical possibility'

as the very touchstone of what might really actually be the case. Truth is

physical truth; possibility physical possibility; and necessity physical

necessity, on such a view. But we have just seen, if only in the case of a

very contrived example so far, that this view is wrong. The existence of a

'physically possible world' in which we are brains in a vat (and always

were and will be) does not mean that we might really, actually, possibly be brains in a vat. What rules out

this possibility is not physics but philosophy.

Some philosophers,

eager both to assert and minimize the claims of their profession at the

same time (the typical state of mind of Anglo-American philosophy in the

twentieth century), would say: 'Sure. You have shown that some things that

seem to be physical possibilities are really conceptual impossibilities. What's so surprising about

that?'

Well, to be sure, my

argument can be described as a 'conceptual' one. But to describe

philosophical activity as the search for 'conceptual' truths makes it all

sound like inquiry about the

meaning of words. And that

is not at all what we have been engaging in.

What we have been doing

is considering the preconditions

for thinking about, representing, referring to, etc. We have

investigated these preconditions not

by investigating the meaning of these words and phrases (as a linguist

might, for example) but by reasoning a

priori. Not in the old 'absolute' sense (since we don't claim

that magical theories of reference are a

priori wrong), but in the sense of inquiring into what is reasonably possible assuming certain general premises, or

making certain very broad theoretical assumptions. Such a procedure is

neither 'empirical' nor quite 'a priori', but has elements of both ways of

investigating. In spite of the fallibility of my procedure, and its dependence

upon assumptions which might be described as 'empirical' (e.g. the

assumption that the mind has no access to external things or properties

apart from that provided by the senses), my procedure has a close relation

to what Kant called a 'transcendental' investigation; for it is an

investigation, I repeat, of the preconditions

of reference and hence of thought, preconditions built in to the nature of

our minds themselves, though not (as Kant hoped) wholly independent of

empirical assumptions.

One of the premises of

the argument is obvious: that magical theories of reference are wrong,

wrong for mental representations and not only for physical ones. The other

premise is that one cannot refer to certain kinds of things, e.g. trees, if one has no causal

interaction at all with them,3 or with things in terms of

which they can be described. But why should we accept these premises? Since

these constitute the broad framework within which I am arguing, it is time

to examine them more closely.

The reasons for denying necessary connections between representations and their referents

I mentioned earlier

that some philosophers (most famously, Brentano) have ascribed to the mind

a power, 'intentionality', which precisely enables it to refer. Evidently, I have rejected

this as no solution. But what gives me this right? Have I, perhaps, been

too hasty?

These philosophers did

not claim that we can think about external things or properties without

using representations at all. And the argument I gave above comparing

visual sense data to the ant's 'picture' (the argument via the science

fiction story about the 'picture' of a tree that came from a paint-splash

and that gave rise to sense data qualitatively similar to our 'visual

images of trees', but unaccompanied by any concept of a tree) would be accepted as showing that images do not necessarily refer. If

there are mental representations that necessarily refer (to external

things) they must be of the nature of concepts

and not of the nature of images. But what are concepts?

When we introspect we

do not perceive 'concepts' flowing through our minds as such. Stop the

stream of thought when or where we will, what we catch are words, images,

sensations, feelings. When I speak my thoughts out loud I do not think them

twice. I hear my words as you do. To be sure it feels different to me when

I utter words that I believe and when I utter words I do not believe (but

sometimes, when I am nervous, or in front of a hostile audience, it feels

as if I am lying when I know I am telling the truth); and it feels

different when I utter words I understand and when I utter words I do not

understand. But I can imagine without difficulty someone thinking just

these words (in the sense of saying them in his mind) and having just the

feeling of understanding, asserting, etc., that I do, and realizing a

minute later (or on being awakened by a hypnotist) that he did not

understand what had just passed through his mind at all, that he did not

even understand the language these words are in. I don't claim that this is

very likely; I simply mean that there is nothing at all unimaginable about

this. And what this shows is not that concepts are words (or images, sensations, etc.), but that to

attribute a 'concept' or a 'thought' to someone is quite different from

attributing any mental 'presentation', any introspectible entity or event,

to him. Concepts are not mental presentations that intrinsically refer to

external objects for the very decisive reason that they are not mental

presentations at all. Concepts are signs used in a certain way; the signs

may be public or private, mental entities or physical entities, but even

when the signs are 'mental' and 'private', the sign itself apart from its

use is not the concept. And signs do not themselves intrinsically refer.

We can see this by

performing a very simple thought experiment. Suppose you are like me and

cannot tell an elm tree from a beech tree. We still say that the reference

of 'elm' in my speech is the same as the reference of 'elm' in anyone

else's, viz. elm trees, and that the set of all beech trees is the

extension of 'beech' (i.e. the set of things the word 'beech' is truly

predicated of) both in your speech and my speech. Is it really credible

that the difference between what 'elm' refers to and what 'beech' refers to

is brought about by a difference in our concepts?

My concept of an elm tree is exactly the same as my concept of a beech tree

(I blush to confess). (This shows that the determination of reference is

social and not individual, by the way; you and I both defer to experts who can tell elms from beeches.) If

someone heroically attempts to maintain that the difference between the

reference of 'elm' and the reference of 'beech' in my speech is explained

by a difference in my psychological state, then let him imagine a Twin

Earth where the words are switched. Twin Earth is very much like Earth; in

fact, apart from the fact that 'elm' and 'beech' are interchanged, the

reader can suppose Twin Earth is exactly like Earth. Suppose I have a Doppelganger on Twin Earth who is

molecule for molecule identical with me (in the sense in which two neckties

can be 'identical'). If you are a dualist, then suppose my Doppelganger thinks the same

verbalized thoughts I do, has the same sense data, the same dispositions,

etc. It is absurd to think his psychological state is one bit different

from mine: yet his word 'elm' represents beeches,

and my word 'elm' represents elms. (Similarly, if the 'water' on Twin Earth

is a different liquid, say, XYZ and not H2O, then 'water'

represents a different liquid when used on Twin Earth and when used on

Earth, etc.) Contrary to a doctrine that has been with us since the

seventeenth century, meanings just

aren't in the head.

We have seen that

possessing a concept is not a matter of possessing images (say, of trees,

or even images, 'visual' or 'acoustic', of sentences, or whole discourses,

for that matter) since one could possess any system of images you please

and not possess the ability to use the sentences in situationally

appropriate ways (considering both linguistic factors, namely what has been said

before, and non-linguistic factors as determining 'situational

appropriateness'). A man may have all the images you please, and still be

completely at a loss when one says to him 'point to a tree', even if a lot

of trees are present. He may even have the image of what he is supposed to

do, and still not know what he is supposed to do. For the image, if not

accompanied by the ability to act in a certain way, is just a picture, and

acting in accordance with a picture is itself an ability that one may or

may not have. (The man might picture himself pointing to a tree, but just

for the sake of contemplating something logically possible; himself

pointing to a tree after someone has produced the, to him, meaningless

sequence of sounds 'please point to a tree'.) He would still not know that

he was supposed to point to a tree, and he would still not understand 'point to a tree'.

I have considered the

ability to use certain sentences to be the criterion for possessing a

full-blown concept, but this could easily be liberalized. We could allow

symbolism consisting of elements which are not words in a natural language,

for example, and we could allow such mental phenomena as images and other

types of internal events. What is essential is that these should have the

same complexity, ability to be combined with each other, etc., as sentences

in a natural language. For, although a particular presentation (say, a

blue flash) might serve a particular mathematician as the inner expression

of the whole proof of the Prime Number Theorem, still there would be no

temptation to say this (and it would be false to say this) if that

mathematician could not unpack his 'blue flash' into separate steps and

logical connections. But, no matter what sort of inner phenomena we allow

as possible expressions of

thought, arguments exactly similar to the foregoing will show that it is

not the phenomena themselves that constitute understanding, but rather the

ability of the thinker to employ

these phenomena, to produce the right phenomena in the right circumstances.

The foregoing is a very

abbreviated version of Wittgenstein's argument in Philosophical Investigations. If it

is correct, then the attempt to understand thought by what is called

'phenomenological' investigation is fundamentally misguided; for what the

phenomenologists fail to see is that what they are describing is the inner expression of thought, but that the understanding of that expression

(one's understanding of one's own thoughts) is not an occurrence but an ability. Our example of a man

pretending to think in Japanese (and deceiving a Japanese telepath) already

shows the futility of a phenomenological approach to the problem of understanding. For even if there is

some introspectible quality which is present when and only when one really understands (this seems false

on introspection, in fact), still that quality is only correlated with understanding, and it

is still possible that the man fooling the Japanese telepath have that

quality too and still not

understand a word of Japanese.

On the other hand,

consider the perfectly possible man who does not have any 'interior

monologue' at all. He speaks perfectly good English, and if asked what his

opinions are on a given subject, he will give them at length. But he never

thinks (in words, images, etc.) when he is not speaking out loud; nor does

anything 'go through his head', except that (of course) he hears his own

voice speaking, and has the usual sense impressions from his surroundings,

plus a general 'feeling of understanding'. (Perhaps he is in the habit of

talking to himself.) When he types a letter or goes to the store, etc., he

is not having an internal 'stream of thought'; but his actions are

intelligent and purposeful, and if anyone walks up and asks him 'What are

you doing?' he will give perfectly coherent replies.

This man seems

perfectly imaginable. No one would hesitate to say that he was conscious,

disliked rock and roll (if he frequently expressed a strong aversion to

rock and roll), etc., just because he did not think conscious thoughts

except when speaking out loud.

What follows from all

this is that (a) no set of mental events (images or more 'abstract' mental

happenings and qualities) constitutes

understanding; and (b) no set of mental events is necessary for understanding. In

particular, concepts cannot be

identical with mental objects of any kind. For, assuming that

by a mental object we mean something introspectible, we have just seen that

whatever it is, it may be absent in a man who does understand the

appropriate word (and hence has the full-blown concept), and present in a

man who does not have the concept at all.

Coming back now to our

criticism of magical theories of reference (a topic which also concerned

Wittgenstein), we see that, on the one hand, those 'mental objects' we can introspectively detect (words,

images, feelings, etc.) do not intrinsically refer any more than the ant's

picture does (and for the same reasons), while the attempts to postulate

special mental objects, 'concepts', which do have a necessary connection with their referents, and

which only trained phenomenologists can detect, commit a logical blunder; for concepts are (at

least in part) abilities

and not occurrences. The doctrine that there are mental presentations which

necessarily refer to external things is not only bad natural science; it is

also bad phenomenology and conceptual confusion.

Endnotes

1 In this book the terms 'representation' and

'reference' always refer to a relation between a word (or other sort of

sign, symbol, or representation) and something that actually exists (i.e.

not just an 'object of thought'). There is a sense of 'refer' in which I

can 'refer' to what does not exist; this is not the sense in which 'refer'

is used here. An older word for what I call 'representation' or 'reference'

is denotation.

Secondly, I follow the custom of modern logicians and use 'exist' to mean

'exist in the past, present, or future'. Thus Winston Churchill 'exists',

and we can 'refer to' or 'represent' Winston Churchill, even though he is

no longer alive.

2 A. M. Turing, 'Computing Machinery and Intelligence', Mind (1950), reprinted in A. R.

Anderson (ed.), Minds and Machines.

3 If

the Brains in a Vat will have causal connection with, say, trees in the future, then perhaps they can now refer to trees by the description

'the things I will refer to as "trees" at such and such a future

time'. But we are to imagine a case in which the Brains in a Vat never get out of the vat, and hence never get into causal connection with

trees, etc.
